This is a research dissertation project for the Interactive Design and Electronic Arts master's program at the University of Sydney. The research investigated the growing potential of drone delivery and how humans should interact with delivery drones.

Background

Commercial drone delivery services such as Amazon Prime Air will soon become a reality, yet little research has examined how humans should interact with a delivery drone. Existing studies report mixed findings on how comfortable people are during close-proximity human-drone interaction. However, a few studies suggest that the drone's aesthetic appearance can affect comfort levels, and that people feel more comfortable when they understand the drone's intent. Studies have also indicated that a drone's intent can be conveyed through animated movements and light effects. No research, however, has examined the combination of movement, light and sound effects.

Research Objective

My research aims to glimpse a future in which drones are self-sufficient and smart, and to explore what the drone delivery user experience might be like. In particular, it addresses the following research questions:

What effects does the use of light and sound effects have on the user experience?

How can the combination of lights, sound and drone movements communicate the intent of a drone?

What are users' preferences regarding the modality used to communicate the drone's actions?

Drone design and user scenario design

The drone design is based on current literature and design trends. To develop the animated user scenarios, a CAD model of the drone was built in SolidWorks, and the animations of the two user scenarios were put together in Adobe After Effects.

Two user scenario animations were made. Scenario A is the baseline, in which the drone conveys its intent through movement alone and cannot use light or sound effects. In Scenario B, the drone communicates its intent with light and sound effects in addition to its animated movements.

CAD Process

CAD animation via KeyShot

Animation put together in After Effects

Animation scenario A (baseline)

Animation scenario B (Light and sound effects)

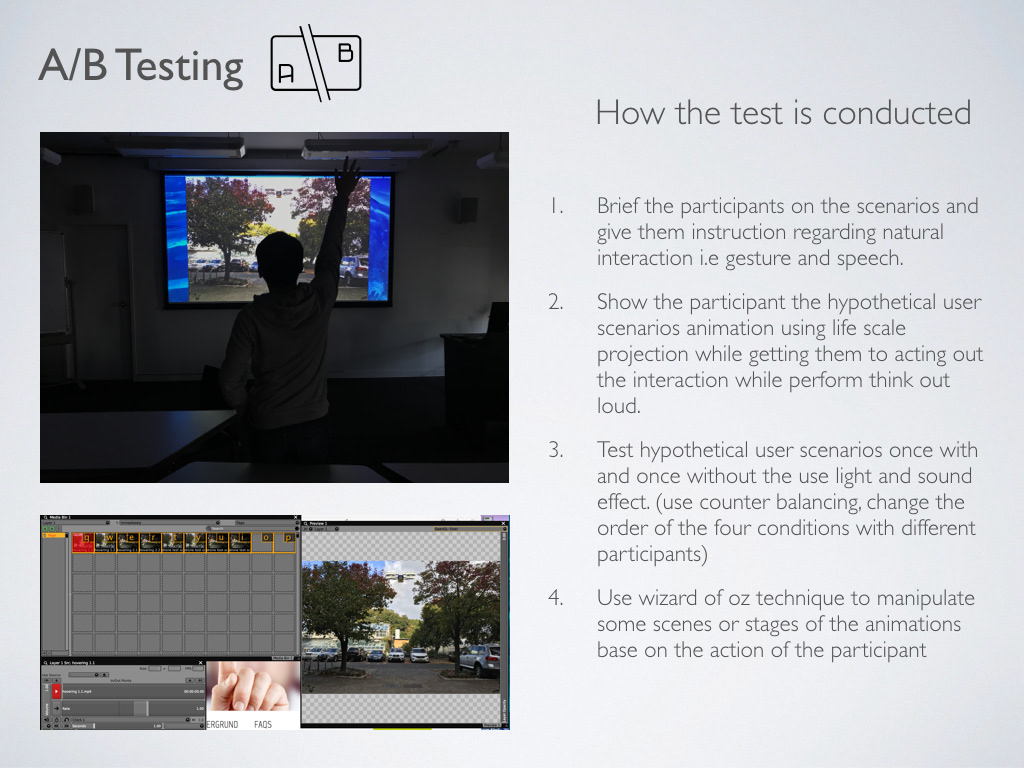

A/B Testing

The animated user scenarios were used for the A/B testing. Each participant was shown scenarios A and B as life-size projections, using the setup and instructions below.

Data Collection

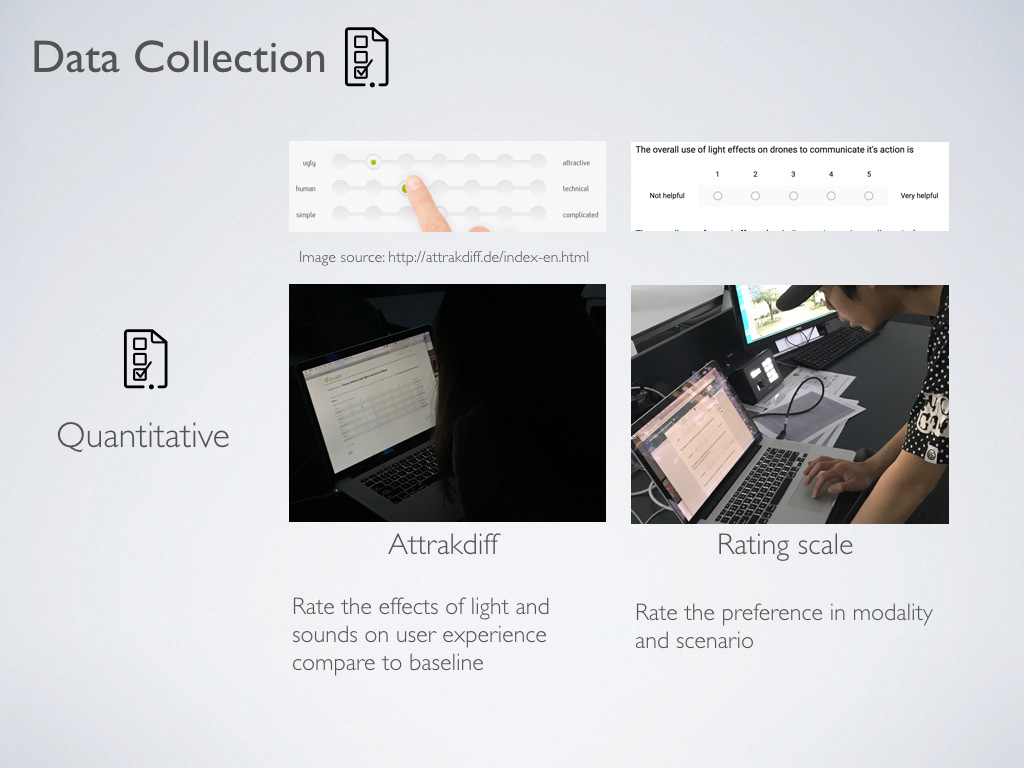

Both quantitative and qualitative data were collected from the A/B testing.

Quantitative data were gathered using the AttrakDiff semantic differential questionnaire and a rating scale questionnaire. Participants completed an AttrakDiff questionnaire after each scenario they saw. Once a participant had seen both scenarios and completed the AttrakDiff questionnaires for scenarios A and B, they completed the rating scale questionnaire. The AttrakDiff questionnaire measures the effect of light and sound effects on the user experience compared with the baseline, while the rating scale measures preference among the modalities used in the two scenarios.
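As an illustration of how AttrakDiff responses can be turned into a comparison between the two scenarios, here is a minimal sketch. It assumes the standard AttrakDiff layout of 28 word pairs rated on a 7-point scale coded from -3 to +3, with seven pairs for each of four dimensions: pragmatic quality (PQ), hedonic quality identity (HQ-I), hedonic quality stimulation (HQ-S) and attractiveness (ATT). The function names and the block-of-seven item grouping are hypothetical simplifications; the published questionnaire interleaves and reverse-codes its items, so a real analysis should apply the official scoring key. This is not necessarily how the dissertation processed its data.

```python
from statistics import mean

# Assumed AttrakDiff structure: 28 word pairs, 7 per dimension.
# Hypothetical simplification: items are grouped in consecutive blocks of 7
# and are already reverse-coded where needed.
DIMENSIONS = ("PQ", "HQ-I", "HQ-S", "ATT")
ITEMS_PER_DIMENSION = 7


def dimension_scores(responses):
    """Average one participant's 28 ratings (each -3..+3) per dimension."""
    if len(responses) != len(DIMENSIONS) * ITEMS_PER_DIMENSION:
        raise ValueError("expected 28 ratings")
    if any(not -3 <= r <= 3 for r in responses):
        raise ValueError("ratings must be in the range -3..+3")
    scores = {}
    for i, dim in enumerate(DIMENSIONS):
        start = i * ITEMS_PER_DIMENSION
        scores[dim] = mean(responses[start:start + ITEMS_PER_DIMENSION])
    return scores


def group_means(participants):
    """Average the per-participant dimension scores across a group."""
    per_person = [dimension_scores(r) for r in participants]
    return {dim: mean(p[dim] for p in per_person) for dim in DIMENSIONS}


def scenario_difference(scenario_a, scenario_b):
    """Scenario B minus Scenario A per dimension (positive: B rated higher)."""
    a = group_means(scenario_a)
    b = group_means(scenario_b)
    return {dim: b[dim] - a[dim] for dim in DIMENSIONS}
```

Because every participant rated both scenarios, the same people appear in both lists, so the per-dimension difference of group means equals the mean of each participant's own B minus A difference. The sketch only describes the data; judging whether a difference is significant would need a paired statistical test on top of it.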

Qualitative data were collected through observation and structured interviews after participants had seen both user scenarios, to gain insight into the overall user experience.

Results and making sense of the data

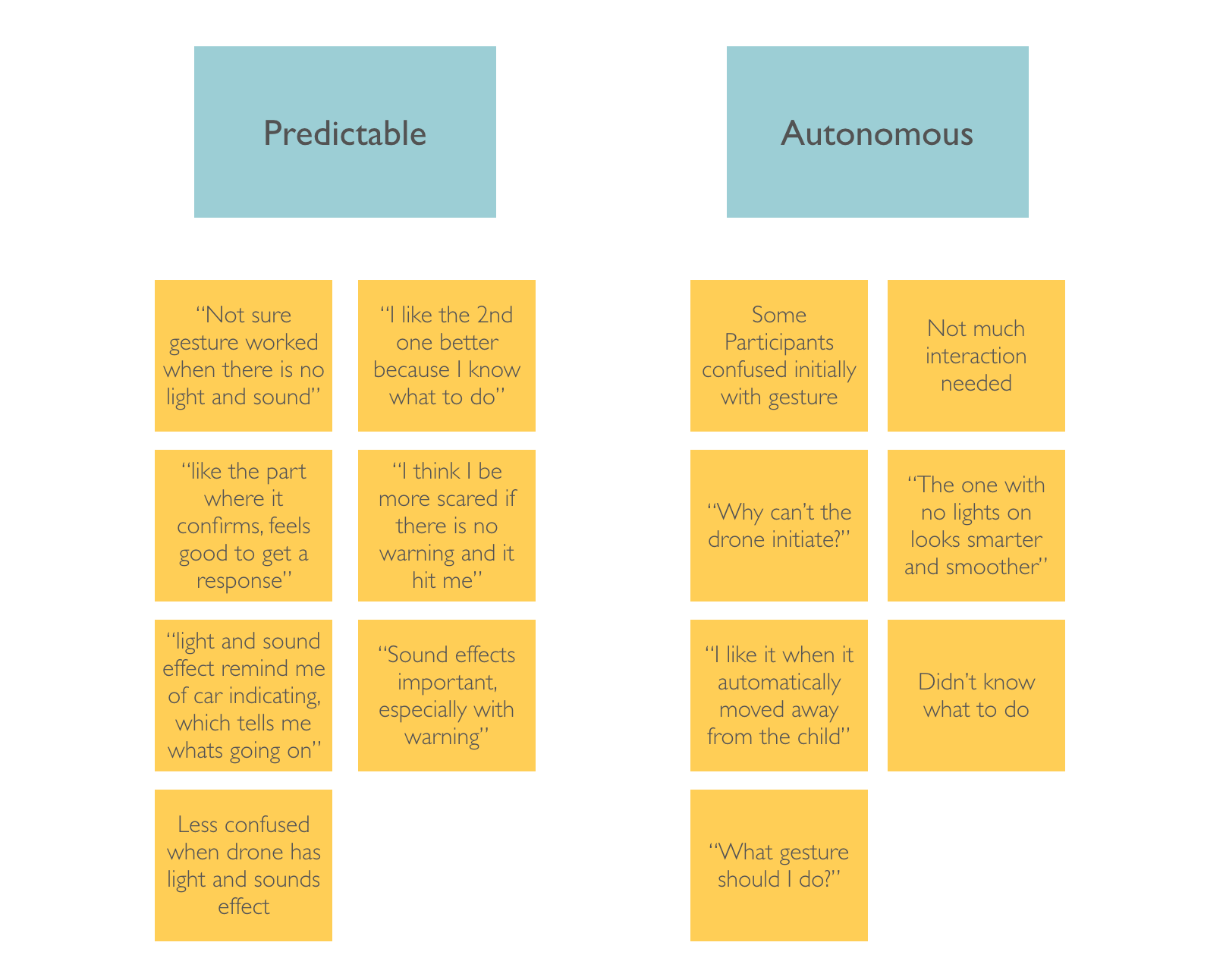

The AttrakDiff results show that users preferred the experience with the drone that used light and sound effects to communicate intent (Scenario B) over the one that did not (Scenario A). The interview data agree with the AttrakDiff data: users liked the experience in which the drone felt more predictable and more autonomous. The baseline scenario, whose animation had no light or sound effects, was more confusing for users. The rating scale did not show any single modality to be significantly preferred over the others, which suggests that the combination of these modalities is what matters.

The research concludes that drone-to-human communication is an important step in human-drone interaction, particularly when it uses a multimodal approach combining movement, light and sound effects.

Based on the A/B testing results and the feedback gathered on the user scenarios, some iterations were made to the final user scenario.